Why Facebook Took So Long to Identify Russian Ads

On September 6, Facebook finally admitted to finding evidence of Russian meddling on its platform aimed at influencing voter behaviour. In a blog post on Facebook’s press site, Chief Security Officer Alex Stamos announced that an internal review had uncovered thousands of political ads containing incendiary messages paid for by Russian actors. “We [Facebook] found approximately $100,000 in ad spending from June 2015 to May 2017 – associated with roughly 3,000 ads – that was connected to 470 inauthentic accounts and Pages in violation of our policies.” The accounts and Pages “likely operated out of Russia,” said Stamos. Facebook’s revelation came nearly a year after the American people elected Trump on November 8, 2016, which raises a pointed question: Why did it take Facebook so long to identify the ads and tie them back to Russia?

Finding ads with links to Russia was like looking for a needle in a haystack, because there were no clear points of reference to jump-start the investigation. At the level of a single ad, Russian spending did not show an unusual pattern that would raise red flags. “For 50% of the ads, less than $3 was spent; for 99% of the ads, less than $1,000 was spent,” detailed Facebook’s Vice President of Policy and Communications Elliot Schrage on October 2. Meanwhile, the roughly $100,000 total attributed to the Russians was a tiny fraction of the $45.57 billion Facebook reportedly took in from advertising in 2015 and 2016. The total was therefore far too small to signal that a group of accounts and Pages was running a coordinated advertising campaign. Had the amount spent on any one ad been excessive, or the overall total been larger, the purchasing behaviour might have raised eyebrows, whether at the level of a single ad or of the ads as a whole. Instead, the Russians left a virtually untraceable monetary footprint.

Nor did the content of the ads cast doubt on their authenticity or origins. They did not read like the traditional political ads that circulate on new media, as Stamos disclosed:

The vast majority of ads run by these accounts didn’t specifically reference the US presidential election, voting or a particular candidate. Rather, the ads and accounts appeared to focus on amplifying divisive social and political messages across the ideological spectrum — touching on topics from LGBT matters to race issues to immigration to gun rights.

In this way, the ads presented themselves as opinions on hot topics, consistent with democratic ideals of free speech, and slipped past Facebook during the ad review process. The fact that Facebook approved and published the ads, which were viewed by an estimated 10 million people in the U.S., suggests that most of them appeared compliant with Facebook’s community standards and advertising terms. Schrage reinforced this point by saying that “many of the ads did not violate our content policies.”

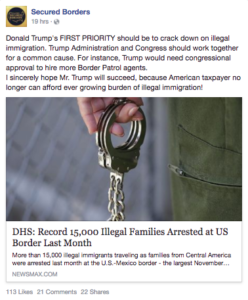

With over 1.86 billion monthly active users as of December 31, 2016, according to Facebook’s 2016 Annual Report, and over 60 million active business pages, combing through accounts and Pages, whether individually or in batches, to reach any Russian-bought ads would have been a mountain to climb. The accounts and Pages the Russians used to purchase the ads were carefully built to look pro-American, obscuring any direct trail to the ads. Take, for example, the Page Secured Borders, which Facebook has since taken offline and which, curiously, can no longer be viewed through the original Google cache link. This Page amassed over 100,000 likes and was later discovered to be Russian-operated. On its face, Secured Borders addressed the American people in American-toned rhetoric on a core American issue, immigration, and established itself in political discourse as a seemingly authoritative voice. As it posted and gained followers, the Page became indistinguishable from legitimate Pages like Secure Our Borders Now, which spoke on the same issue. As a result, the Page’s inauthenticity was not apparent, which allowed it to purchase ads freely.

What seemed like good avenues for identifying the Russian ads – the amount spent on a single ad, the amount spent overall, the content of the ads, or the nature of the accounts and Pages – were in fact blind alleys, since the Russians left no obvious breadcrumbs revealing their involvement or the extent of their interference on Facebook. Evidently well thought out to avoid detection, the Russian “information, or influence operation” gave itself away at no stage, which severely prolonged Facebook’s task of finding the Russian ads. After all, it took Facebook nearly a year after the election to arrive at conclusive findings that Russia bought ads on its platform. Per the Washington Post, the process that reportedly led to the discovery of the Russian ads was the following:

Instead of searching through impossibly large batches of data, Facebook decided to focus on a subset of political ads. Technicians then searched for “indicators” that would link those ads to Russia. To narrow down the search further, Facebook zeroed in on a Russian entity known as the Internet Research Agency, which had been publicly identified as a troll farm. “They worked backwards,” a U.S. official said of the process at Facebook.

Exactly how Facebook “worked backwards” and managed to identify the ads remains unclear or has not yet been disclosed. “We know that our experience is only a small piece of a much larger puzzle,” Schrage shared.

Kiara Bernard is in her final year at McGill, studying Philosophy, Communications and World Religions.

Edited by Phoebe Warren