What the Rise of Deepfakes Means for the Future of Internet Policies

Three weeks ago, reporter Fred Sassy’s exposé on manipulated videos went viral, bringing the dangers of artificial intelligence to the forefront of technology journalism. Interestingly, Fred Sassy is himself a deepfake, a product of the ever-evolving capabilities of artificial intelligence and machine learning. As science fiction and reality collide, it’s important to understand the consequences of the technologies we’ve created. Potentially dangerous technologies like deepfakes remain a step ahead of both the tools built to detect them and the policies intended to contain them.

Deepfakes have a plethora of applications, not all of them as innocent as “Sassy Justice,” a series of deepfake videos hosted by the hyperrealistic Fred Sassy, a fictional reporter from Cheyenne, Wyoming. The series was brought to life by Trey Parker and Matt Stone, the creators of “South Park,” and the actor and voice artist Peter Serafinowicz. Fred Sassy wears a deepfaked version of Donald Trump’s face, but his voice and personality are entirely separate from that familiar appearance. The series was made using deep learning, a subset of machine learning, which is in turn a branch of artificial intelligence.

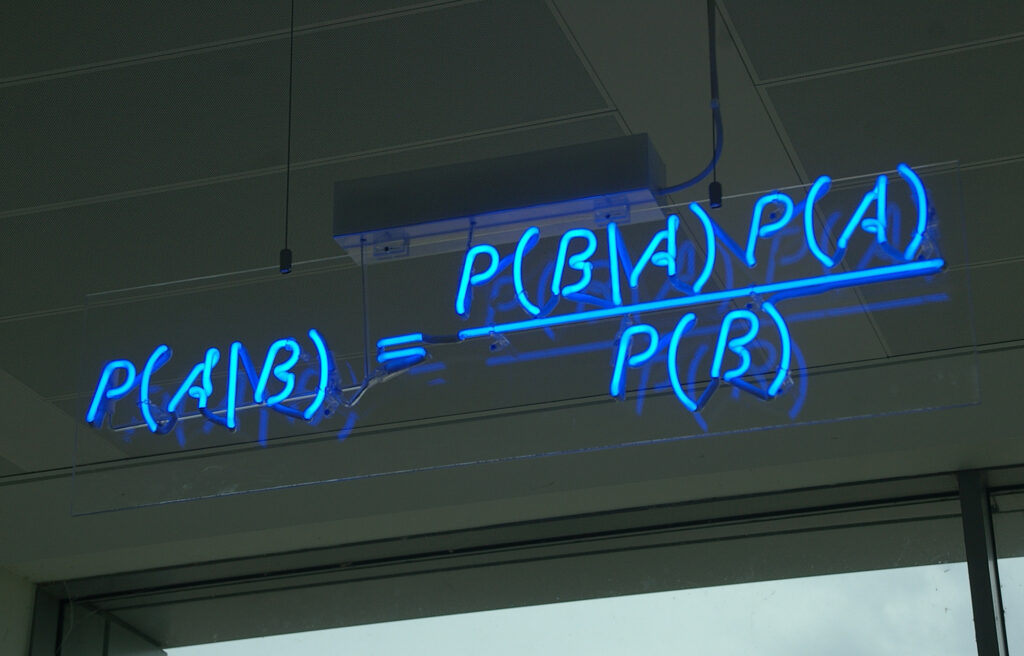

Essentially, artificial intelligence is a form of technology that makes computers behave in ways that could be considered distinctly human, such as reasoning and adapting. One common kind of artificial intelligence is machine learning, which uses algorithms that improve their performance through exposure to more data. Deepfakes are created using a branch of machine learning called deep learning. Deep learning relies on structures called neural networks, loosely inspired by the human brain, which pass data through many stacked layers of simple computations. By adjusting the connections between these layers during training, neural networks learn to recognize underlying relationships in a set of data.
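The idea of data flowing through stacked layers can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how “Sassy Justice” or any real deepfake tool works: the layer sizes and weights below are hypothetical, hand-picked numbers, whereas a real deep learning model learns millions of weights from training data.

```python
import math


def relu(x):
    # Passes positive values through and replaces negative ones with zero.
    return max(0.0, x)


def sigmoid(x):
    # Squashes any number into the range (0, 1), e.g. a "how likely" score.
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases, activation):
    """One layer: each output is a weighted sum of all inputs plus a bias,
    passed through an activation function."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed the output of each layer into the next one."""
    for weights, biases, activation in layers:
        inputs = dense(inputs, weights, biases, activation)
    return inputs


# Hypothetical weights for three stacked layers: 3 inputs -> 4 -> 2 -> 1 output.
# A trained network would have learned these values instead.
layers = [
    ([[0.2, -0.5, 0.1], [0.7, 0.3, -0.2], [-0.4, 0.9, 0.5], [0.1, 0.1, 0.1]],
     [0.0, 0.1, -0.1, 0.0], relu),
    ([[0.5, -0.3, 0.8, 0.2], [-0.6, 0.4, 0.1, 0.9]], [0.05, -0.05], relu),
    ([[1.2, -0.7]], [0.0], sigmoid),
]

score = forward([0.9, 0.1, 0.4], layers)[0]
print(f"Output score: {score:.3f}")
```

Each call to `dense` is one layer, and `forward` simply hands one layer’s output to the next; stacking many such layers is what makes the network “deep.” Training, the step in which the weights are adjusted as the model sees more data, is left out of this sketch.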

These neural networks can create images and videos of people doing and saying things they never did in real life. The network is fed large quantities of images or videos of a person, analyzes them, and produces new output. That output can be superimposed on other images or videos, or used to create entirely new ones. Deepfakes open the door to new media like “Sassy Justice”; however, their rise also has detrimental consequences.

After an altered video of US House of Representatives Speaker Nancy Pelosi went viral in the summer of 2019, concern about the use of deepfakes to spread political misinformation increased drastically. In reality, 96 per cent of deepfakes are pornographic, and they are overwhelmingly used to target women. The likenesses of many TikTok creators have been used in deepfake porn without their consent. According to Giorgio Patrini, the founder and CEO of the AI research institute Sensity, influencers on platforms like Instagram and TikTok “are more exposed than if they were just uploading pictures” because videos provide more material for making deepfakes. This is all the more alarming given that nearly a third of American TikTok users may be aged 14 or under.

In the past, thousands of data points were required to create a deepfake. As a result, most deepfakes featured public figures, such as female celebrities and politicians. Now, however, AI bots on messaging apps like Telegram can create a deepfake from a single image, making it easier than ever to produce nude or sexually explicit images of private individuals. According to self-reported data from Telegram users, 70 per cent of targets were private individuals whose photos were taken from social media accounts or private material. The ease with which anyone can now create sexually explicit deepfakes of individuals has raised concern among AI firms like Sensity about the use of deepfakes in revenge porn: “deepfakes are overwhelmingly being used to harass, humiliate, and even extort women.”

As the creation of deepfakes becomes more accessible to the general public, two distinct questions arise: who is responsible for monitoring deepfakes, and how can they be detected?

Many social media platforms, like Twitter and Reddit, have already implemented policies banning deepfakes. Pornhub also prohibits users from posting deepfakes, although the site is still where most deepfakes can be found. Former Sensity researcher Henry Ajder says: “they’ve basically just banned people from labelling deepfakes. People could still upload them and use a different name.” Companies may have policies that allow them to remove deepfakes and even punish users, but there are few legal precedents for companies or individuals to take action against people who misuse deepfakes. Although some deepfakes may infringe copyright or data protection laws, or may be considered defamatory, deepfakes are not in themselves illegal.

Many countries also have laws that criminalize revenge porn, but these laws do not always allow deepfakes to be classified as revenge porn. In some countries, such as Britain, the laws explicitly exclude images created by altering an existing image, a category that includes deepfakes. Additionally, images shared on the internet do not respect international borders, so it can be extremely difficult for governments to implement policies that give citizens real justice and protection from malicious deepfakes. The inconsistency of governmental policies across borders and the lack of legal precedent suggest that social media platforms are better equipped to police the misuse of deepfakes on the web.

Yet the question of how to detect deepfakes is increasingly difficult to answer. As Nasir Memon, a professor of computer science at NYU, puts it, “there’s no money to be made out of detecting [fake media],” which means that few people are motivated to research detection methods. Some research institutes, like Sensity, maintain comprehensive databases of fake media and publish research and a software API for detecting deepfakes. Additionally, the Pentagon’s Defense Advanced Research Projects Agency runs a media forensics program that has sponsored high-level research into detecting deepfakes and other AI-manipulated media. Scientists look for small clues, such as unnatural blinking patterns, fuzzy edges, and distorted lighting, to uncover deepfakes. For example, a program trained only on pictures of people with their eyes open will not generate a person who blinks realistically. Unfortunately, as quickly as these tell-tale signs are discovered, deepfake creators can modify their technology to correct the flaws. In short, detection methods cannot keep pace with the advancement of deepfake technology.

The shortfalls of deepfake detection can have serious consequences. In 2012, Australian activist and law graduate Noelle Martin discovered hundreds of doctored, sexually explicit images of herself online, and deepfake videos of her later followed. The images and videos were posted on many different sites and were easy to find with a quick Google image search. Friends, family, employers, and strangers alike could see this disturbing fake imagery, which haunted Martin for years as she campaigned to criminalize revenge porn in Australia.
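The blinking cue described in the detection discussion can be illustrated with a simple heuristic in Python. This is a minimal sketch, not any research group’s actual detector. It assumes that some face-tracking tool (not shown) has already produced a per-frame “eye openness” score for a video, such as an eye aspect ratio computed from facial landmarks. The threshold values and function names are hypothetical choices made for illustration. The check flags a clip when the person on screen blinks implausibly rarely.

```python
# Hypothetical values chosen for illustration only, not calibrated thresholds.
EYE_CLOSED_THRESHOLD = 0.2   # openness score below which the eyes count as closed
MIN_BLINKS_PER_MINUTE = 5    # assumed lower bound for a plausible human blink rate


def count_blinks(openness):
    """Count open-to-closed transitions in a sequence of per-frame
    eye-openness scores (one blink per run of 'closed' frames)."""
    blinks = 0
    eyes_closed = False
    for score in openness:
        if score < EYE_CLOSED_THRESHOLD and not eyes_closed:
            blinks += 1
            eyes_closed = True
        elif score >= EYE_CLOSED_THRESHOLD:
            eyes_closed = False
    return blinks


def looks_suspicious(openness, fps):
    """Flag a clip whose blink rate falls below the assumed minimum."""
    minutes = len(openness) / fps / 60
    if minutes == 0:
        return False  # no footage, nothing to judge
    return count_blinks(openness) / minutes < MIN_BLINKS_PER_MINUTE


# A hypothetical one-minute clip at 30 frames per second in which the eyes never close.
never_blinks = [0.3] * 1800
print(looks_suspicious(never_blinks, fps=30))
```

Like the other tell-tale signs, this check is fragile: once creators train their models on footage of people blinking, a detector that relies on a single cue like this one stops working.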

The implications of deepfake technology will continue to unfold as the battle between the creation and detection of realistic deep learning models plays out. Only one thing is clear: without better detection methods and more reliable policies, deepfakes pose an unprecedented threat to our perception of the media that defines our world.

Edited by Rebecka Eriksdotter Pieder

Featured Image: “Machine Learning” by Nathalie Redick.